The world around us is in constant motion: people walk, animals move, objects deform. Capturing and understanding such dynamic scenes in 3D has long been a challenge in computer vision and graphics. Neural Radiance Fields (NeRF) recently revolutionized static 3D scene reconstruction and novel view synthesis, but handling dynamic, deformable objects remains a tough nut to crack.

A new research paper titled "Neural Radiance Fields for Dynamic Scenes with Deformable Objects" (arXiv:2506.10980) proposes an innovative approach to extend NeRF’s capabilities to dynamic environments. This blog post breaks down the core ideas, methods, and potential applications of this exciting development.

What Are Neural Radiance Fields (NeRF)?

Before diving into the dynamic extension, let’s quickly recap what NeRF is:

- NeRF is a deep learning framework that represents a 3D scene as a continuous volumetric radiance field.

- Given a set of images from different viewpoints, NeRF learns to predict density and view-dependent color at any 3D point, then renders photorealistic novel views by accumulating those predictions along camera rays (volume rendering).

- It excels at static scenes but struggles with dynamic content due to its assumption of a fixed scene.
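To make the recap concrete, here is a minimal Python sketch of the compositing step NeRF uses to turn per-sample densities and colors into a single pixel. It follows the quadrature from the original NeRF paper, where each sample contributes T_i (1 - exp(-σ_i δ_i)) c_i and T_i is the transmittance accumulated in front of it. The function name `composite` and the sample values are illustrative, not taken from the paper discussed here.

```python
import math


def composite(sigmas, colors, deltas):
    """Alpha-composite the samples along one ray with NeRF's quadrature:
    C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i,
    where T_i = prod_{j<i} exp(-sigma_j * delta_j) is the transmittance."""
    transmittance = 1.0
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # how much this sample is seen
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light surviving past this sample
    return tuple(rgb), 1.0 - transmittance     # pixel color and total opacity


# An empty blue sample followed by a dense red one yields an almost opaque red pixel.
rgb, opacity = composite([0.0, 50.0], [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)], [0.1, 0.1])
print(rgb, opacity)  # red channel and opacity are both 1 - exp(-5), about 0.993
```

In a full NeRF, `sigmas` and `colors` come from the network queried at points sampled along a camera ray; here they are hand-picked so the result is easy to check by hand.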

The Challenge: Dynamic Scenes with Deformable Objects

Real-world scenes often contain moving and deforming objects, such as a dancing person or a waving flag. Modeling such scenes requires:

- Capturing time-varying geometry and appearance.

- Handling non-rigid deformations, where objects change shape over time.

- Maintaining high-quality rendering from arbitrary viewpoints at any time frame.

Traditional NeRF methods fall short because they assume static geometry and appearance.

The Proposed Solution: Dynamic NeRF for Deformable Objects

The authors propose a novel framework that extends NeRF to handle dynamic scenes with deformable objects by combining:

- Deformation Fields: They introduce a learnable deformation field that maps points in the dynamic scene at any time to a canonical (reference) space. This canonical space represents the object in a neutral, undeformed state.

- Canonical Radiance Field: Instead of modeling the scene directly at each time step, the system learns a canonical radiance field representing the object’s appearance and geometry in the canonical space.

- Time-Dependent Warping: For each timestamp, the model predicts how points move from the canonical space to their deformed positions in the dynamic scene, enabling it to reconstruct the scene at any moment.
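One common way to write the combination of a deformation field and a canonical radiance field, used for example by D-NeRF, is shown below. The notation (Ψ for the deformation network, F_Θ for the canonical field) is ours, and the paper may parameterize the warp differently, for instance as a full mapping rather than an offset:

$$
\Delta\mathbf{x} = \Psi(\mathbf{x}, t), \qquad \mathbf{x}_c = \mathbf{x} + \Delta\mathbf{x}, \qquad (\mathbf{c}, \sigma) = F_\Theta(\mathbf{x}_c, \mathbf{d})
$$

Here $\mathbf{x}$ is a point in the scene at time $t$, $\mathbf{d}$ is the viewing direction, $\Psi$ predicts the offset that carries $\mathbf{x}$ into canonical space, and $F_\Theta$ returns the color $\mathbf{c}$ and density $\sigma$ stored there. Because $F_\Theta$ is shared across all timestamps, geometry and appearance are learned once, and only $\Psi$ has to explain the motion.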

How Does It Work?

The approach can be summarized in three main steps:

1. Learning the Canonical Space

- The model first learns a canonical 3D representation of the object or scene in a neutral pose.

- This representation encodes the geometry and appearance without deformation.

2. Modeling Deformations Over Time

- A deformation network predicts how each point in the canonical space moves to its position at any given time.

- This captures complex non-rigid motions like bending, stretching, or twisting.

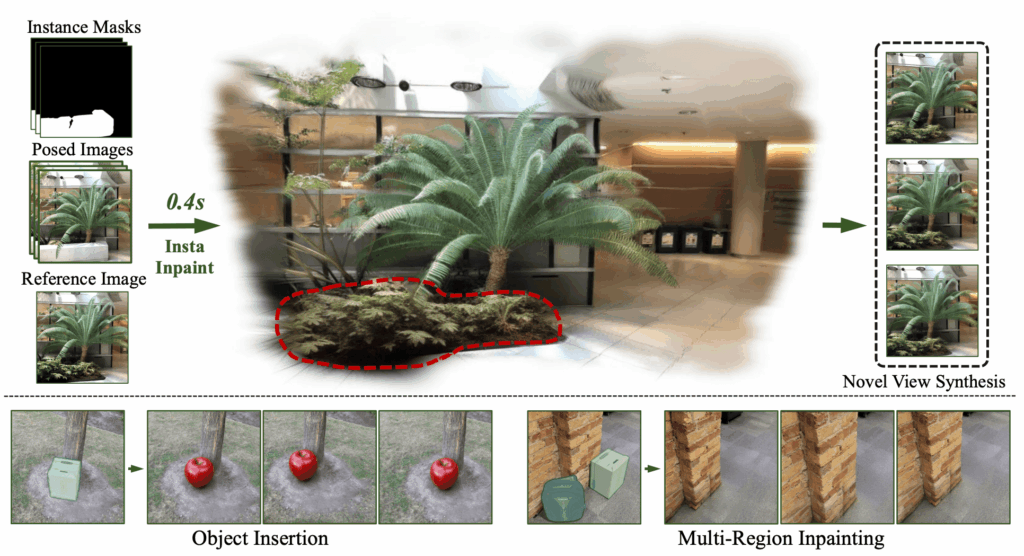

3. Rendering Novel Views Dynamically

- Given a camera viewpoint and time, the model:

  - Maps the query 3D points from the dynamic space back to the canonical space using the inverse deformation.

  - Queries the canonical radiance field to get color and density.

  - Uses volume rendering to synthesize the final image.

This pipeline enables rendering photorealistic images of the scene from new viewpoints and times, effectively animating the deformable object.
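The three rendering steps can be sketched end to end in plain Python. Everything here is a hypothetical toy, not the paper's networks: the canonical field is an analytic sphere, and the deformation is a hand-written forward warp (canonical point to its position at time t, the direction described in step 2) that translates the object along x and bends it slightly with y. Because that warp runs from canonical to deformed, the hypothetical helper `to_canonical` approximates its inverse by fixed-point iteration; many dynamic NeRFs instead learn the backward warp directly and skip this step.

```python
import math


# Hypothetical canonical radiance field: a solid sphere of radius 0.5 at the
# origin with constant density and a constant orange color.
def canonical_field(p):
    x, y, z = p
    sigma = 10.0 if x * x + y * y + z * z <= 0.25 else 0.0
    return sigma, (1.0, 0.5, 0.2)


# Hypothetical forward deformation: offset that moves canonical point p_c to its
# position at time t (translation along x plus a y-dependent bend; zero at t = 0).
def forward_offset(p_c, t, shift=1.0, bend=0.3):
    _, y, _ = p_c
    return (shift * t + bend * t * math.sin(y), 0.0, 0.0)


def to_canonical(x_obs, t, iters=20):
    """Approximate the inverse warp: solve x_obs = p_c + D(p_c, t) for p_c by
    iterating p_c <- x_obs - D(p_c, t). Converges when D is a contraction in p_c."""
    p = x_obs
    for _ in range(iters):
        d = forward_offset(p, t)
        p = tuple(xo - di for xo, di in zip(x_obs, d))
    return p


def render_ray(origin, direction, t, near=-2.0, far=2.0, n_samples=128):
    """Render one ray at time t: sample points, warp them to canonical space,
    query the canonical field, and alpha-composite (NeRF quadrature)."""
    delta = (far - near) / n_samples
    transmittance = 1.0
    rgb = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        s = near + (i + 0.5) * delta  # midpoint of the i-th interval along the ray
        x_obs = tuple(o + s * d for o, d in zip(origin, direction))
        sigma, color = canonical_field(to_canonical(x_obs, t))
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(rgb), 1.0 - transmittance


# A ray parallel to z at x = 1 misses the canonical sphere but hits it once moved.
ray_o, ray_d = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)
print(render_ray(ray_o, ray_d, t=0.0)[1])  # 0.0: nothing on the ray at t = 0
print(render_ray(ray_o, ray_d, t=1.0)[1])  # close to 1: the shifted sphere blocks the ray
```

At t = 0 the ray misses the sphere; at t = 1 the warp has shifted the object onto the ray, so the same canonical field now produces an almost opaque pixel. Fixed-point inversion is only one option, and it converges here because the offset depends on the canonical point only weakly (its Lipschitz constant is at most |bend · t| < 1).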

Key Innovations and Advantages

- Unified Representation: The canonical space plus deformation fields provide a compact and flexible way to model dynamic scenes without needing explicit mesh tracking or complex rigging.

- Generalization: The model can handle a wide variety of deformations, making it applicable to humans, animals, and other non-rigid objects.

- High Fidelity: By building on NeRF’s volumetric rendering, the approach produces detailed and realistic images.

- Temporal Coherence: The deformation fields encourage smooth transitions over time, helping to avoid the flickering and artifacts common in dynamic scene reconstruction.

Potential Applications

This approach opens the door to numerous exciting applications:

- Virtual Reality and Gaming: Realistic dynamic avatars and environments that respond naturally to user interaction.

- Film and Animation: Easier capture and rendering of complex deforming characters without manual rigging.

- Robotics and Autonomous Systems: Better understanding of dynamic environments for navigation and interaction.

- Medical Imaging: Modeling deformable anatomical structures over time, such as heartbeats or breathing.

- Sports Analysis: Reconstructing athletes’ movements in 3D for training and performance evaluation.

Challenges and Future Directions

While promising, the method faces some limitations:

- Computational Cost: Training and rendering can be resource-intensive, limiting real-time applications.

- Data Requirements: High-quality multi-view video data is needed for training, which may not always be available.

- Complex Scenes: Handling multiple interacting deformable objects or large-scale scenes remains challenging.
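To put the computational cost in numbers, the sketch below counts network evaluations for a single frame. The resolution (800 × 800), the 192 samples per ray, and the assumption of one deformation-network pass plus one canonical-field pass per sample are illustrative choices, not figures reported in the paper:

```python
def network_queries_per_frame(width, height, samples_per_ray, passes_per_sample=2):
    """Count MLP evaluations needed to render one frame.

    passes_per_sample=2 is an assumption: one deformation-network pass to warp
    the sample into canonical space, plus one canonical-field pass for (color, density).
    """
    rays = width * height  # one ray per pixel
    return rays * samples_per_ray * passes_per_sample


print(network_queries_per_frame(800, 800, 192))     # 245760000
print(network_queries_per_frame(800, 800, 192, 1))  # 122880000 with a single pass per sample
```

Roughly a quarter of a billion network evaluations for one frame at one timestamp helps explain why real-time rendering is hard without acceleration, and rendering a video multiplies this by the number of frames.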

Future research may focus on:

- Improving efficiency for real-time dynamic scene rendering.

- Extending to multi-object and multi-person scenarios.

- Combining with semantic understanding for richer scene interpretation.

Summary: A Leap Forward in Dynamic 3D Scene Modeling

The work on Neural Radiance Fields for dynamic scenes with deformable objects represents a significant leap in 3D vision and graphics. By elegantly combining canonical radiance fields with learnable deformation mappings, this approach overcomes the static limitations of traditional NeRFs and unlocks the potential to capture and render complex, non-rigid motions with high realism.

For AI enthusiasts, computer vision researchers, and developers working on immersive technologies, this research offers a powerful tool to bring dynamic 3D worlds to life.

If you’re interested in exploring the technical details, the full paper is available on arXiv: https://arxiv.org/pdf/2506.10980.pdf.

Feel free to reach out if you’d like a deeper dive into the methodology or potential integrations with your projects!