Diagnosing faults in large, complex cyber-physical systems (CPSs) such as manufacturing plants, water treatment facilities, or space stations is hard. Traditional diagnostic methods typically require detailed system models or extensive labeled fault data, both of which are costly and sometimes impossible to obtain. A recent study by Steude et al. proposes a data-driven diagnostic approach that needs only minimal prior knowledge: basic subsystem relationships and nominal operation data.

In this blog post, we’ll break down their methodology, key insights, and experimental results, and look at what the approach could mean for fault diagnosis in large CPSs.

The Challenge of Diagnosing Large CPSs

- Complexity and scale: Modern CPSs consist of numerous interconnected subsystems, sensors, and actuators generating vast amounts of data.

- Limited prior knowledge: Detailed system models or comprehensive fault labels are often unavailable or incomplete.

- Traditional methods’ limitations:

  - Supervised learning requires labeled faults, which are expensive and error-prone to obtain.

  - Symbolic and model-based diagnosis demands precise system models, which are hard to build and maintain.

  - Existing approaches struggle to detect unforeseen or novel faults.

Research Questions Guiding the Study

The authors focus on two main questions:

- RQ1: Can we generate meaningful symptoms for diagnosis by enhancing data-driven anomaly detection with minimal prior knowledge (like subsystem structure)?

- RQ2: Can we identify the faulty subsystems causing system failures using these symptoms without heavy modeling efforts?

Core Idea: Leveraging Minimal Prior Knowledge

The approach requires only three inputs:

- Nominal operation data: Time series sensor measurements during normal system behavior.

- Subsystem-signals map: A mapping that associates each subsystem with its relevant sensors.

- Causal subsystem graph: A directed graph representing causal fault propagation paths between subsystems (e.g., a faulty pump causing anomalies in connected valves).

This minimal prior knowledge is often available or can be derived with limited effort in practice.
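To make the three inputs concrete, here is a minimal sketch of how they might be represented in Python. The class name `PriorKnowledge`, its methods, and the pump/valve/tank example are all illustrative assumptions, not structures taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class PriorKnowledge:
    """Illustrative container for the two structural inputs (hypothetical name)."""

    # Subsystem name -> names of the sensor signals assigned to it.
    subsystem_signals: dict[str, list[str]]
    # Directed edges (cause, effect): a fault in `cause` may propagate to `effect`.
    causal_edges: list[tuple[str, str]]

    def validate(self) -> None:
        """Raise ValueError if an edge mentions a subsystem without a signal mapping."""
        known = set(self.subsystem_signals)
        for cause, effect in self.causal_edges:
            for name in (cause, effect):
                if name not in known:
                    raise ValueError(f"unknown subsystem in causal graph: {name!r}")

    def subsystem_view(self, row: dict[str, float], subsystem: str) -> dict[str, float]:
        """Select only the signals of one subsystem from a full sensor reading."""
        return {signal: row[signal] for signal in self.subsystem_signals[subsystem]}


# Made-up example system, not taken from the paper.
knowledge = PriorKnowledge(
    subsystem_signals={
        "pump": ["pump_pressure", "pump_current"],
        "valve": ["valve_position"],
        "tank": ["tank_level"],
    },
    causal_edges=[("pump", "valve"), ("valve", "tank")],
)
knowledge.validate()

# Nominal operation data: one reading (signal name -> value) per time step.
nominal_data = [
    {"pump_pressure": 5.0, "pump_current": 2.0, "valve_position": 0.4, "tank_level": 1.2},
    {"pump_pressure": 5.1, "pump_current": 2.1, "valve_position": 0.4, "tank_level": 1.3},
]

print(knowledge.subsystem_view(nominal_data[0], "pump"))
```

Keeping the map and the graph as plain dictionaries and edge lists reflects how little structure the approach asks for: no equations, no fault labels, just who owns which sensor and who can affect whom.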

Method Overview

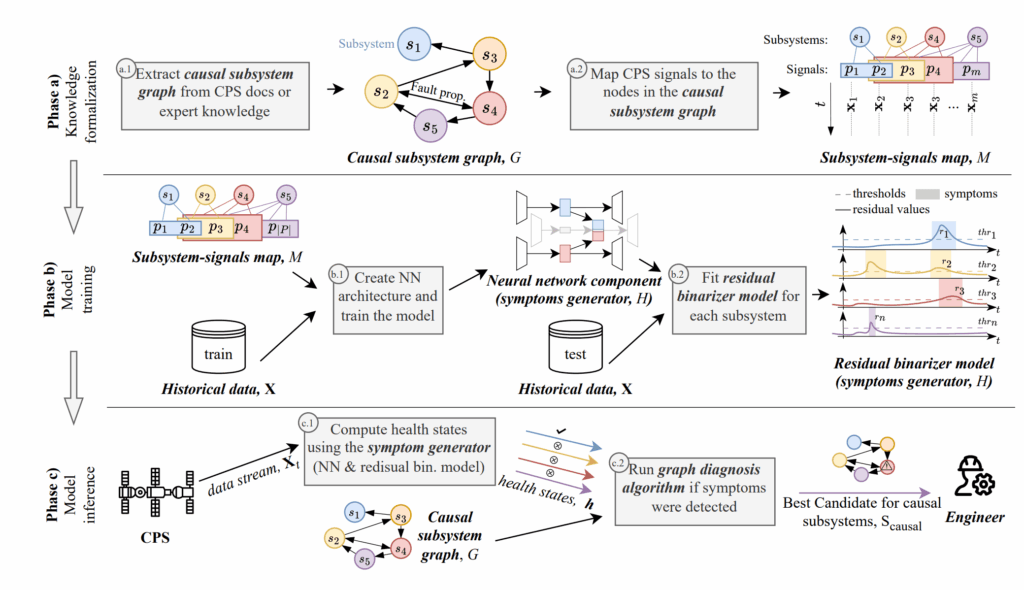

The diagnostic process consists of three main phases:

1. Knowledge Formalization

- Extract the causal subsystem graph from system documentation or expert knowledge.

- Map sensor signals to corresponding subsystems, establishing the subsystem-signals map.

2. Model Training

- Train a neural network-based symptom generator that performs anomaly detection at the subsystem level by analyzing sensor data.

- Fit a residual binarizer model per subsystem to convert continuous anomaly scores into binary symptoms indicating abnormal behavior.

3. Model Inference and Diagnosis

- Continuously monitor system data streams.

- Generate subsystem-level health states (symptoms) using the trained neural network and binarizer.

- Run a graph-based diagnosis algorithm that uses the causal subsystem graph and detected symptoms to identify the minimal set of causal subsystems responsible for the observed anomalies.
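The final step can be illustrated with a small sketch. This is not the authors' algorithm; it is one simple way to reason over a causal graph, assuming the graph is acyclic and that a symptomatic subsystem is "explained" whenever any of its causal ancestors is also symptomatic. The function name `diagnose` and the example edges are hypothetical.

```python
def diagnose(causal_edges, symptoms):
    """Return the symptomatic subsystems that no symptomatic ancestor can explain.

    causal_edges: iterable of (cause, effect) pairs; assumed to form an acyclic graph.
    symptoms: set of subsystem names currently flagged as abnormal.
    """
    # Reverse lookup: effect -> set of direct causes.
    parents = {}
    for cause, effect in causal_edges:
        parents.setdefault(effect, set()).add(cause)

    def has_symptomatic_ancestor(node):
        # Depth-first walk upstream through the causal graph.
        stack = list(parents.get(node, ()))
        seen = set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            if current in symptoms:
                return True
            stack.extend(parents.get(current, ()))
        return False

    return {s for s in symptoms if not has_symptomatic_ancestor(s)}


# Made-up causal graph: the pump feeds a valve and a cylinder, the valve feeds a tank.
edges = [("pump", "valve"), ("valve", "tank"), ("pump", "cylinder")]

print(diagnose(edges, {"valve", "tank"}))     # tank is downstream of valve
print(diagnose(edges, {"tank", "cylinder"}))  # neither explains the other
```

Walking all ancestors rather than only direct parents makes the sketch tolerant of a missed symptom in the middle of a propagation chain, at the cost of sometimes attributing two independent faults to a single upstream cause.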

Why Subsystem-Level Diagnosis?

- Bridging granularity: Instead of analyzing individual sensors (too fine-grained) or the entire system (too coarse), focusing on subsystems balances interpretability and scalability.

- Modular anomaly detection: Neural networks specialized per subsystem can better capture local patterns.

- Causal reasoning: The causal subsystem graph enables tracing fault propagation paths, improving root cause identification.
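The sketch below shows the general shape of subsystem-level symptom generation: a residual per subsystem, followed by a binarizer fitted on nominal data only. It rests on two loud assumptions. First, a per-signal nominal mean stands in for the neural network, which the paper trains but this post does not specify. Second, the binarizer is a simple quantile threshold on nominal residuals, which may differ from the authors' choice. The class name `SubsystemSymptomGenerator` is hypothetical.

```python
from statistics import mean, quantiles


class SubsystemSymptomGenerator:
    """Toy per-subsystem anomaly detector (stand-in for a neural network)."""

    def __init__(self, signals, quantile_pct=99):
        self.signals = signals            # signals belonging to this subsystem
        self.quantile_pct = quantile_pct  # percentile of nominal residuals used as threshold
        self.baseline = {}
        self.threshold = None

    def _residual(self, row):
        # Mean absolute deviation from the baseline across the subsystem's signals.
        return mean(abs(row[s] - self.baseline[s]) for s in self.signals)

    def fit(self, nominal_rows):
        # ASSUMPTION: the "model" is just the nominal mean of each signal.
        self.baseline = {s: mean(r[s] for r in nominal_rows) for s in self.signals}
        residuals = [self._residual(r) for r in nominal_rows]
        # quantiles(n=100) returns 99 cut points; index k-1 is the k-th percentile.
        cuts = quantiles(residuals, n=100, method="inclusive")
        self.threshold = cuts[self.quantile_pct - 1]
        return self

    def symptom(self, row):
        """Binary symptom: True if the residual exceeds the nominal threshold."""
        return self._residual(row) > self.threshold


# Synthetic nominal data for a hypothetical pump subsystem.
nominal = [
    {"pump_pressure": 5.0 + 0.01 * (i % 10), "pump_current": 2.0 + 0.005 * (i % 7)}
    for i in range(200)
]
generator = SubsystemSymptomGenerator(["pump_pressure", "pump_current"]).fit(nominal)

faulty_reading = {"pump_pressure": 9.0, "pump_current": 2.0}
print(generator.symptom(faulty_reading))
```

One generator per subsystem yields one binary symptom per subsystem, which is the form of input a graph-based diagnosis step needs.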

Key Contributions

- Demonstrated that structure-informed deep learning models can generate meaningful symptoms at the subsystem level.

- Developed a novel graph diagnosis algorithm leveraging minimal causal information to pinpoint root causes efficiently.

- Provided a systematic evaluation on both simulated and real-world datasets, showing strong diagnostic performance with minimal prior knowledge.

Experimental Highlights

Simulated Hydraulic System

- The system comprises subsystems like pumps, valves, tanks, and cylinders interconnected causally.

- Results showed that the true causal subsystem was included in the diagnosis set in 82% of cases.

- The search space for diagnosis was effectively reduced in 73% of scenarios, improving efficiency.
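For readers who want to compute similar figures on their own data, here is a rough sketch of the two rates. The exact metric definitions in the paper may differ. This version assumes a "hit" means the true faulty subsystem is in the returned diagnosis set, and that the search space counts as "reduced" when the set is non-empty and smaller than the full list of subsystems. The function name `evaluate` and the toy cases are made up and are not the paper's data.

```python
def evaluate(cases, all_subsystems):
    """Compute hit rate and search-space reduction rate.

    cases: list of (true_cause, diagnosis_set) pairs, one per fault scenario.
    all_subsystems: set of every subsystem in the system.
    """
    total = len(cases)
    # Hit: the true faulty subsystem appears in the diagnosis set.
    hits = sum(1 for true_cause, diagnosis in cases if true_cause in diagnosis)
    # ASSUMPTION: "reduced" means a non-empty set smaller than the whole system.
    reduced = sum(
        1 for _, diagnosis in cases if 0 < len(diagnosis) < len(all_subsystems)
    )
    return {"hit_rate": hits / total, "reduction_rate": reduced / total}


# Toy scenarios for illustration only.
all_subsystems = {"pump", "valve", "tank", "cylinder"}
cases = [
    ("pump", {"pump"}),
    ("valve", {"valve", "tank"}),
    ("tank", {"pump"}),             # miss: true cause not in the set
    ("cylinder", all_subsystems),   # hit, but no reduction
]

print(evaluate(cases, all_subsystems))
```

The two rates pull in opposite directions: returning every subsystem always hits but never reduces, so they are most informative when reported together.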

Real-World Secure Water Treatment Dataset

- The approach successfully identified faulty subsystems in a complex industrial water treatment setting.

- Demonstrated practical applicability beyond simulations.

Related Research Landscape

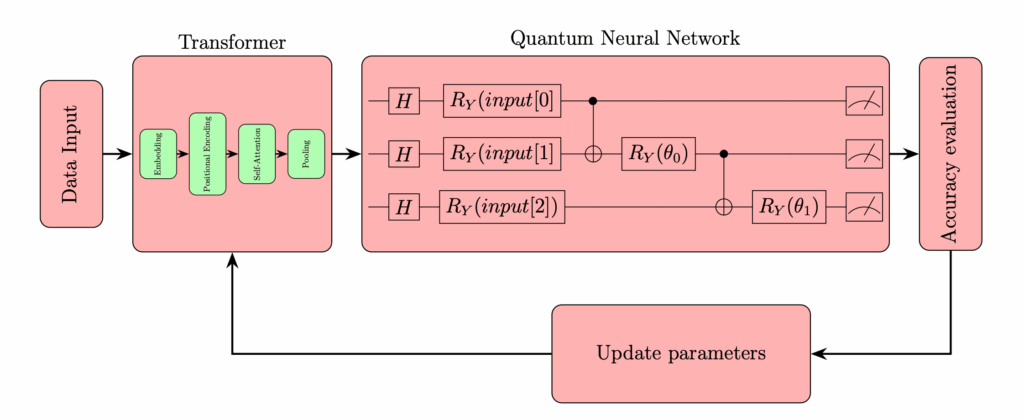

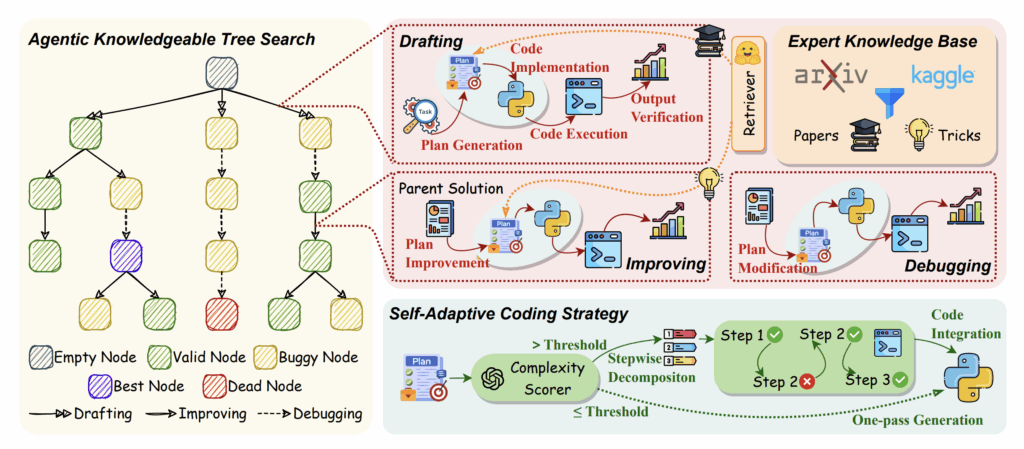

- Anomaly Detection: Deep learning models (transformers, graph neural networks, autoencoders) excel at detecting deviations but often lack root cause analysis.

- Fault Diagnosis: Traditional methods rely on detailed models or labeled faults, limiting scalability.

- Causality and Fault Propagation: Using causal graphs to model fault propagation is a powerful concept but often requires detailed system knowledge.

This work combines data-driven anomaly detection with minimal causal information to enable scalable, practical diagnosis.

Why This Matters

- Minimal prior knowledge: Reduces dependency on costly system modeling or fault labeling.

- Scalability: Suitable for large, complex CPSs with many sensors and subsystems.

- Practicality: Uses information commonly available in industrial settings.

- Improved diagnostics: Enables faster and more accurate root cause identification, aiding maintenance and safety.

Future Directions

- Extending to more diverse CPS domains with varying complexity.

- Integrating online learning for adaptive diagnosis in evolving systems.

- Enhancing causal graph extraction methods using data-driven or language model techniques.

- Combining with explainability tools to improve human trust and understanding.

Summary

Steude et al.’s novel approach presents a promising path toward effective diagnosis in large cyber-physical systems with minimal prior knowledge. By combining subsystem-level anomaly detection with a causal graph-based diagnosis algorithm, their method balances accuracy, efficiency, and practicality. This work opens new opportunities for deploying intelligent diagnostic systems in real-world industrial environments where detailed system models or labeled faults are scarce.

Paper: https://arxiv.org/pdf/2506.10613

If you’re interested in the intersection of AI, industrial automation, and fault diagnosis, this research highlights how data-driven methods can overcome longstanding challenges with minimal manual effort.