Recommender systems are everywhere, from suggesting movies on streaming platforms to recommending products in online stores. At the heart of these systems lies a challenge called matrix completion: predicting the ratings or preferences users would give to items they have not rated yet. Recently, a new method called MCCL (Matrix Completion using Contrastive Learning) has been proposed to make these predictions more accurate and robust. Here’s a breakdown of what MCCL is all about and why it matters.

What’s the Problem with Current Recommendation Methods?

- Sparse Data: User-item rating matrices are mostly incomplete because users rate only a few items.

- Noise and Irrelevant Connections: Graph Neural Networks (GNNs), popular for modeling user-item interactions, can be misled by noisy or irrelevant edges in the interaction graph.

- Overfitting: GNNs sometimes memorize the training data too well, performing poorly on new, unseen data.

- Limited Denoising: Existing contrastive learning methods improve robustness but often don’t explicitly remove noise.
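To make the sparsity point concrete, here is a minimal sketch using a made-up rating table. The user and item names and the `density` helper are illustrative assumptions, not part of MCCL. Matrix completion amounts to predicting a rating for every pair the table does not contain.

```python
# Hypothetical toy data: observed ratings keyed by (user, item).
ratings = {
    ("alice", "matrix"): 5,
    ("alice", "up"): 3,
    ("bob", "matrix"): 4,
    ("carol", "heat"): 2,
    ("carol", "up"): 5,
    ("dave", "alien"): 4,
}

users = sorted({u for u, _ in ratings})
items = sorted({i for _, i in ratings})


def density(ratings, n_users, n_items):
    """Fraction of the user-item matrix that actually holds a rating."""
    return len(ratings) / (n_users * n_items)


# Every (user, item) pair without a rating is a cell to be predicted.
missing = [(u, i) for u in users for i in items if (u, i) not in ratings]

print(f"density: {density(ratings, len(users), len(items)):.2f}")
print(f"cells to predict: {len(missing)}")
```

Real rating datasets are typically far sparser than this toy table, which is why every observed edge, noisy or not, carries so much weight.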

How Does MCCL Work?

MCCL tackles these issues by combining denoising, augmentation, and contrastive learning, all applied to small local subgraphs rather than the whole interaction graph:

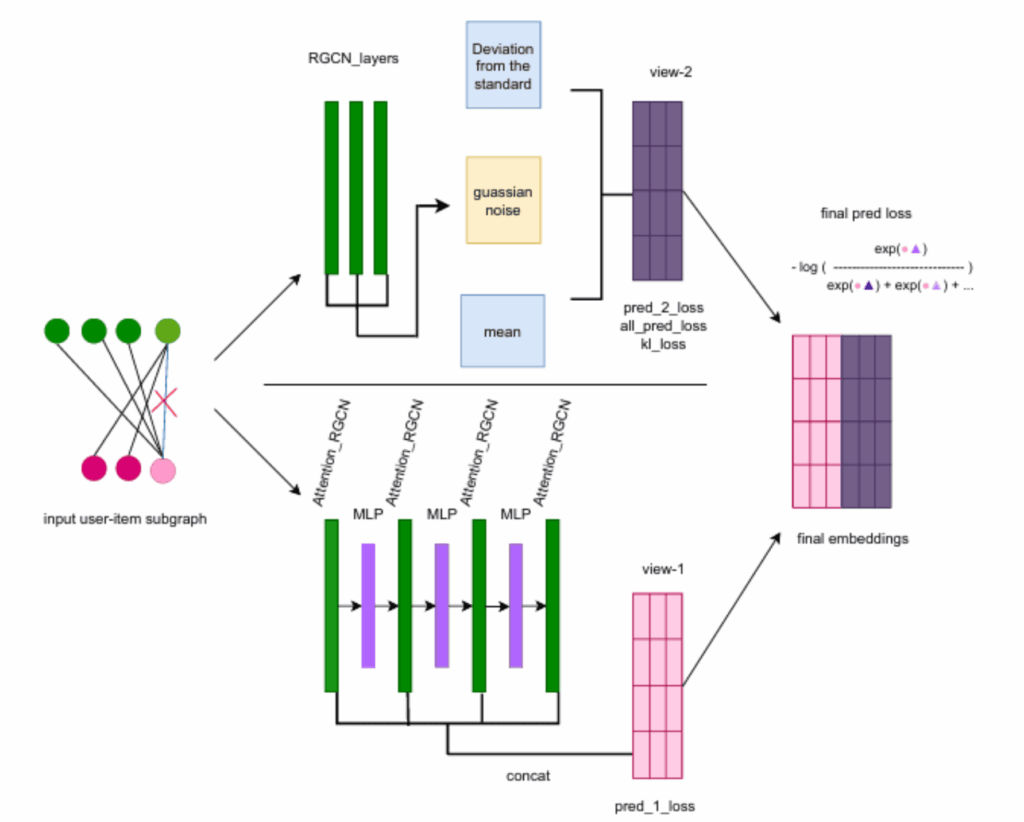

- Local Subgraph Extraction: For each user-item pair, MCCL looks at a small neighborhood around them in the interaction graph, capturing local context.

- Two Complementary Graph Views:

  - Denoising View: Uses an attention-based Relational Graph Convolutional Network (RGCN) to weigh edges, reducing the influence of noisy or irrelevant connections.

  - Augmented View: Employs a Graph Variational Autoencoder (GVAE) to create a latent representation aligned with a standard distribution, encouraging generalization.

- Contrastive Mutual Learning: MCCL trains these two views to learn from each other by minimizing differences between their representations, capturing shared meaningful patterns while preserving their unique strengths.
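The building blocks above can be sketched in plain Python. This is a rough illustration under stated assumptions, not the authors' implementation: the toy graph, the 1-hop neighborhood rule, the softmax standing in for attention weights, the standard normal prior for the GVAE, and the cosine-based disagreement score are all choices made here for clarity.

```python
import math

# Hypothetical toy interaction graph: (user, item) -> rating.
ratings = {
    ("u1", "i1"): 5, ("u1", "i2"): 3,
    ("u2", "i1"): 4, ("u2", "i3"): 2,
    ("u3", "i2"): 1, ("u3", "i3"): 5,
    ("u4", "i4"): 3,
}


def extract_local_subgraph(ratings, user, item):
    """1-hop neighborhood of a (user, item) pair, without the target edge."""
    items = {i for (u, i) in ratings if u == user} | {item}
    users = {u for (u, i) in ratings if i == item} | {user}
    edges = {
        (u, i): r
        for (u, i), r in ratings.items()
        if u in users and i in items and (u, i) != (user, item)
    }
    return users, items, edges


def softmax(scores):
    """Turn raw edge scores into attention weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def kl_to_standard_normal(mu, log_var):
    """KL divergence from a diagonal Gaussian to N(0, I) (assumed prior)."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


def view_disagreement(z_a, z_b):
    """1 - cosine similarity between the two views' embeddings."""
    dot = sum(a * b for a, b in zip(z_a, z_b))
    norm = math.sqrt(sum(a * a for a in z_a)) * math.sqrt(sum(b * b for b in z_b))
    return 1.0 - dot / norm


# Local context for predicting how u1 would rate i3.
users, items, edges = extract_local_subgraph(ratings, "u1", "i3")

# Made-up edge scores: low-scoring (likely noisy) edges get small weights.
weights = softmax([2.0, 0.1, -1.5])

print(sorted(users), sorted(items), len(edges))
print([round(w, 3) for w in weights])
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))
print(view_disagreement([1.0, 0.0], [0.0, 1.0]))
```

In a real model the edge scores and embeddings would come from trained networks, and the training loss would combine the rating error, the KL term, and the disagreement between views. The sketch only shows what each term measures.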

Why Is This Important?

- Better Prediction Accuracy: MCCL reduces rating-prediction error, measured as RMSE (root mean squared error, where lower is better), by up to 0.8%. That might seem small, but it is significant in recommendation contexts.

- Enhanced Ranking Quality: It improves ranking metrics by up to 36%, meaning users see more relevant suggestions near the top of their lists.

- Robustness to Noise: By explicitly denoising the graph, MCCL reduces the risk of misleading information corrupting recommendations.

- Generalization: The use of variational autoencoders helps the system perform well even on new, unseen data.
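For readers who want to see how an RMSE figure like the one above is computed, here is a small sketch. The ratings and predictions are made up for illustration, and reading the reported gain as a relative reduction is an assumption; the numbers below are not results from MCCL.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error over paired predictions and true ratings."""
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline, improved):
    """Fractional drop in error when moving from baseline to improved."""
    return (baseline - improved) / baseline


# Made-up ratings and predictions, for illustration only.
actual = [4.0, 3.0, 5.0, 2.0]
baseline_pred = [3.5, 3.5, 4.5, 2.5]
improved_pred = [3.6, 3.4, 4.6, 2.4]

base = rmse(baseline_pred, actual)
new = rmse(improved_pred, actual)
print(f"baseline RMSE {base:.3f}, improved RMSE {new:.3f}, "
      f"reduction {relative_reduction(base, new):.1%}")
```

By the same formula, a 0.8% relative reduction would take a hypothetical RMSE of 0.900 down to about 0.893.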

The Bigger Picture

MCCL represents a step forward in making recommender systems smarter and more reliable by:

- Combining the strengths of graph neural networks with self-supervised contrastive learning.

- Addressing common pitfalls like noise and overfitting in graph-based recommendation models.

- Offering a framework that can be extended to other graph-related tasks beyond recommendations.

Final Thoughts

If you’re interested in how AI and graph theory come together to improve everyday tech like recommendations, MCCL is a promising development. By cleverly blending denoising and augmentation strategies within a contrastive learning setup, it pushes the boundaries of what recommender systems can achieve.

Stay tuned for more innovations in this space. The future of personalized recommendations looks brighter than ever!