Modeling and generating realistic human activity patterns over space and time is a crucial challenge in fields ranging from urban planning and public health to autonomous systems and social science. Traditional approaches often rely on handcrafted rules or limited datasets, which restrict their ability to capture the complexity and variability of individual behaviors.

A recent study titled “A Study on Individual Spatiotemporal Activity Generation Method Using MCP-Enhanced Chain-of-Thought Large Language Models” proposes a framework that combines the reasoning capabilities of Large Language Models (LLMs) with the Model Context Protocol (MCP) and chain-of-thought (CoT) prompting to generate detailed, realistic spatiotemporal activity sequences for individuals.

In this blog post, we’ll explore the key ideas behind this approach, its advantages, and potential applications.

The Challenge: Realistic Spatiotemporal Activity Generation

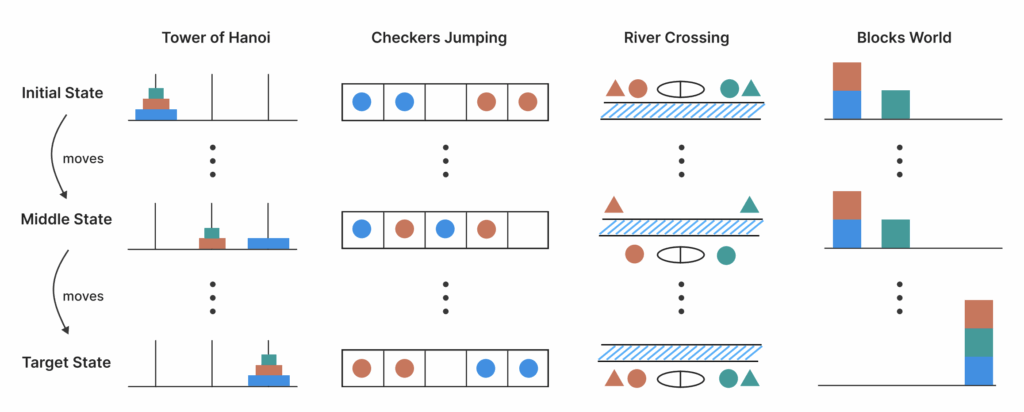

Generating individual activity sequences that reflect realistic patterns in both space and time is challenging because:

- Complex dependencies: Human activities depend on various factors such as time of day, location context, personal preferences, and social interactions.

- Long-range correlations: Activities are not isolated; they follow routines and habits that span hours or days.

- Data scarcity: Detailed labeled data capturing full activity trajectories is often limited or unavailable.

- Modeling flexibility: Traditional statistical or rule-based models struggle to generalize across diverse individuals and scenarios.

Leveraging Large Language Models with Chain-of-Thought Reasoning

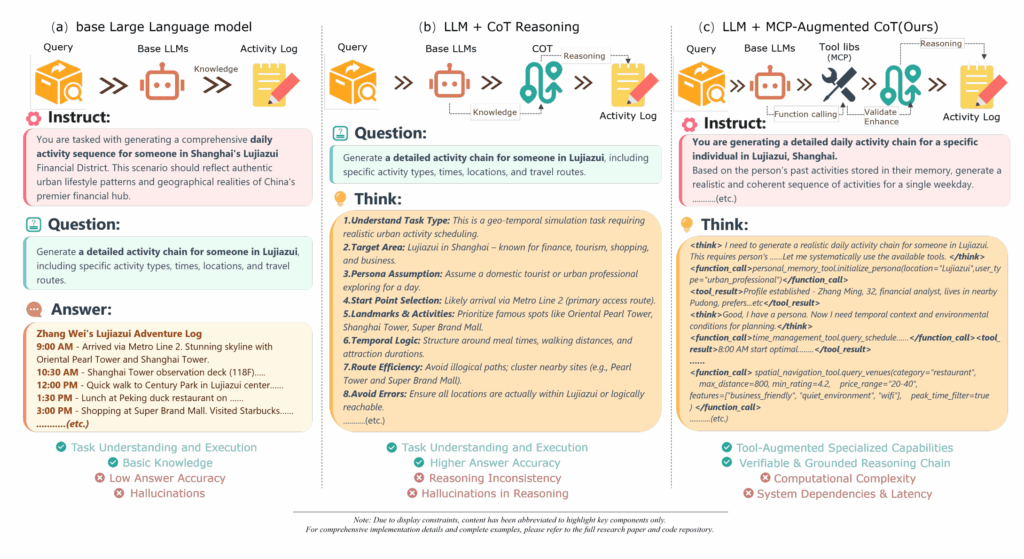

Large Language Models like GPT-4 can perform complex, multi-step reasoning when guided by chain-of-thought (CoT) prompting, which encourages the model to write out intermediate reasoning steps before producing its final output.
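To make the idea concrete, here is a minimal sketch of what a CoT prompt for a single person-day might look like. The function name `build_cot_prompt`, the four reasoning steps, and the output format are illustrative assumptions, not the prompt used in the paper.

```python
def build_cot_prompt(persona: str, date: str, city: str) -> str:
    """Assemble a chain-of-thought prompt for one person-day (illustrative wording)."""
    # Hypothetical reasoning steps; a real prompt would be tuned to the task.
    steps = [
        "List the fixed commitments for the day (work, school, appointments).",
        "Decide when and where meals and rest fit around those commitments.",
        "Choose discretionary activities that match the persona's preferences.",
        "Check that travel between consecutive locations is feasible.",
    ]
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
    return (
        f"You are simulating the day of: {persona}\n"
        f"Date: {date}. City: {city}.\n\n"
        "Think through the following steps in order, writing out your reasoning "
        "for each one:\n"
        f"{numbered}\n\n"
        "Only after finishing all steps, output the final schedule as lines of "
        "'HH:MM-HH:MM | activity | location'."
    )


prompt = build_cot_prompt(
    persona="an office worker who cycles to work",
    date="2025-01-06",
    city="an example city",
)
print(prompt)
```

The key point is the ordering: the model is asked to reason first and commit to a schedule only at the end, so the intermediate steps can be inspected alongside the result.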

However, directly applying LLMs to spatiotemporal activity generation is non-trivial because:

- The model must handle structured spatial and temporal information.

- It needs to maintain consistency across multiple time steps.

- It should incorporate contextual knowledge about locations and activities.
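The first two requirements can be illustrated with a small sketch: a structured record for one activity, plus a check that a generated day is chronologically consistent. The `Activity` type and `find_inconsistencies` helper are hypothetical names introduced here for illustration and do not come from the paper.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Activity:
    """One entry of a generated schedule (hypothetical structure)."""
    name: str
    location: str
    start: datetime
    end: datetime


def find_inconsistencies(schedule: list[Activity]) -> list[str]:
    """Return human-readable problems; an empty list means the day is consistent."""
    problems = []
    for i, act in enumerate(schedule):
        # Each activity must have a positive duration.
        if act.end <= act.start:
            problems.append(f"{act.name}: ends before it starts")
        # Each activity must begin no earlier than the previous one ended.
        if i > 0 and act.start < schedule[i - 1].end:
            problems.append(f"{act.name}: overlaps with {schedule[i - 1].name}")
    return problems


day = [
    Activity("breakfast", "home", datetime(2025, 1, 6, 7, 30), datetime(2025, 1, 6, 8, 0)),
    Activity("work", "office", datetime(2025, 1, 6, 7, 45), datetime(2025, 1, 6, 17, 0)),
]
print(find_inconsistencies(day))  # ['work: overlaps with breakfast']
```

A check like this could be run on every generated sequence, with failures fed back to the model or simply discarded.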

Introducing Model Context Protocol (MCP)

To address these challenges, the authors propose integrating the Model Context Protocol (MCP) with CoT prompting. MCP is an open protocol for connecting LLM applications to external tools and data sources; as used here, it gives the LLM a structured way to:

- Understand and maintain context: MCP encodes spatial, temporal, and personal context in a standardized format.

- Generate stepwise reasoning: The model produces detailed intermediate steps reflecting the decision process behind activity choices.

- Ensure consistency: By formalizing context and reasoning, MCP helps maintain coherent activity sequences over time.
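As a rough illustration of "context in a standardized format", the sketch below serializes a person's spatial, temporal, and personal context to JSON. The `PersonContext` schema and its field names are assumptions made for this post; the paper's actual format is not reproduced here.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class PersonContext:
    """Hypothetical context record passed to the model before each decision."""
    person_id: str
    timestamp: str                      # ISO 8601 local time
    location: dict                      # e.g. {"name": ..., "lat": ..., "lon": ...}
    preferences: list = field(default_factory=list)
    recent_activities: list = field(default_factory=list)


def encode_context(ctx: PersonContext) -> str:
    """Serialize the context deterministically so identical states yield identical text."""
    return json.dumps(asdict(ctx), sort_keys=True, indent=2)


ctx = PersonContext(
    person_id="p001",
    timestamp="2025-01-06T12:05:00",
    location={"name": "office", "lat": 0.0, "lon": 0.0},  # placeholder coordinates
    preferences=["vegetarian food", "short walks"],
    recent_activities=["commute", "work"],
)
print(encode_context(ctx))
```

Keeping the keys sorted and the schema fixed means the model always sees the same fields in the same order, which is one simple way to support consistency across time steps.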

The Proposed Framework: MCP-Enhanced CoT LLMs for Activity Generation

The framework operates as follows:

- Context Encoding: The individual’s current spatiotemporal state and relevant environmental information are encoded using MCP.

- Chain-of-Thought Prompting: The LLM is prompted to reason through activity decisions step-by-step, considering constraints and preferences.

- Activity Sequence Generation: The model outputs a sequence of activities with associated locations and timestamps, reflecting realistic behavior.

- Iterative Refinement: The process can be repeated or conditioned on previous outputs to generate longer or more complex activity patterns.
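The four steps above can be sketched as a simple loop. Everything here is an assumption for illustration: `fake_llm` is a stand-in that returns a fixed response instead of calling a real model, and the `SCHEDULE` marker and pipe-separated row format are invented for this example.

```python
from typing import Callable

Row = tuple[str, str, str]  # (time range, activity, location)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call: returns a fixed reasoning trace and schedule."""
    return (
        "Reasoning: it is a weekday, so work anchors the day.\n"
        "SCHEDULE\n"
        "08:00-09:00 | commute | metro\n"
        "09:00-17:00 | work | office\n"
    )


def parse_schedule(response: str) -> list[Row]:
    """Extract the rows that follow the SCHEDULE marker; reasoning text is ignored."""
    _, _, body = response.partition("SCHEDULE\n")
    rows = []
    for line in body.strip().splitlines():
        time_range, activity, location = (part.strip() for part in line.split("|"))
        rows.append((time_range, activity, location))
    return rows


def generate_days(context: str, n_days: int, llm: Callable[[str], str]) -> list[list[Row]]:
    """Generate one schedule per day, conditioning each prompt on earlier days."""
    history: list[list[Row]] = []
    for day in range(1, n_days + 1):
        prompt = (
            f"Context:\n{context}\n"                 # step 1: encoded context
            f"Previous days: {history}\n"            # step 4: condition on earlier output
            "Reason step by step, then write SCHEDULE "  # step 2: CoT instruction
            f"followed by the activities for day {day}."
        )
        history.append(parse_schedule(llm(prompt)))  # step 3: activity sequence
    return history


days = generate_days('{"person_id": "p001"}', n_days=2, llm=fake_llm)
print(days[0])
```

Swapping `fake_llm` for a real model client is the only change needed to try this against an actual LLM, though a production version would also need to handle malformed responses.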

Advantages of This Approach

- Flexibility: The LLM can generate diverse activity sequences without requiring extensive domain-specific rules.

- Interpretability: Chain-of-thought reasoning provides insight into the decision-making process behind activity choices.

- Context-awareness: MCP ensures that spatial and temporal contexts are explicitly considered, improving realism.

- Scalability: The method can be adapted to different individuals and environments by modifying context inputs.

Experimental Validation

The study evaluates the framework on synthetic and real-world-inspired scenarios, demonstrating that:

- The generated activity sequences exhibit realistic temporal rhythms and spatial patterns.

- The model successfully captures individual variability and routine behaviors.

- MCP-enhanced CoT prompting outperforms baseline methods that lack structured context or reasoning steps.
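This post does not detail which metrics the study uses. As one common way to quantify "realistic temporal rhythms", the sketch below compares the hour-of-day distribution of generated activity start times against an observed one using Jensen-Shannon divergence. The function names and sample data are illustrative assumptions.

```python
import math
from collections import Counter


def hourly_distribution(start_hours: list[int]) -> list[float]:
    """Share of activities starting in each hour 0..23 (assumes a non-empty list)."""
    counts = Counter(start_hours)
    total = len(start_hours)
    return [counts.get(h, 0) / total for h in range(24)]


def js_divergence(p: list[float], q: list[float]) -> float:
    """Jensen-Shannon divergence in bits: 0 for identical distributions, 1 for disjoint ones."""
    def kl(a: list[float], b: list[float]) -> float:
        # Terms with a == 0 contribute nothing; b > 0 wherever a > 0 because b is the mixture.
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


generated = hourly_distribution([8, 8, 12, 18])   # made-up start hours
observed = hourly_distribution([8, 9, 12, 18])    # made-up start hours
print(js_divergence(generated, observed))
```

A lower value means the generated daily rhythm is closer to the reference; the same comparison could be repeated for spatial bins or activity types.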

Potential Applications

- Urban Planning: Simulating realistic human movement patterns to optimize transportation and infrastructure.

- Public Health: Modeling activity patterns to study disease spread or design interventions.

- Autonomous Systems: Enhancing prediction of human behavior for safer navigation and interaction.

- Social Science Research: Understanding behavioral dynamics and lifestyle patterns.

Future Directions

The authors suggest several promising avenues for further research:

- Integrating multimodal data (e.g., sensor readings, maps) to enrich context.

- Extending the framework to group or crowd activity generation.

- Combining with reinforcement learning to optimize activity sequences for specific objectives.

- Applying to real-time activity prediction and anomaly detection.

Conclusion

This study showcases the power of combining Large Language Models with structured context protocols and chain-of-thought reasoning to generate detailed, realistic individual spatiotemporal activity sequences. By formalizing context and guiding reasoning, the MCP-enhanced CoT framework opens new possibilities for modeling complex human behaviors with flexibility and interpretability.

As LLMs continue to improve, approaches like this one may help bridge the gap between raw data and actionable insight into human activity patterns.

Paper: https://arxiv.org/pdf/2506.10853

Stay tuned for more insights into how AI is transforming our understanding and simulation of human behavior in space and time.