Imagine teaching a computer to recognize a new object after seeing just a handful of examples. This is the promise of few-shot learning, a rapidly growing area in artificial intelligence (AI) that aims to mimic human-like learning efficiency. But while humans can quickly grasp new concepts by understanding relationships and context, many AI models struggle when data is scarce.

A recent paper proposes a way to help AI learn better from limited data by modeling conditional class dependencies. Let’s dive into what this means, why it matters, and how it could improve AI’s ability to learn with less.

The Challenge of Few-Shot Learning

Traditional AI models thrive on massive datasets. For example, to teach a model to recognize cats, thousands of labeled cat images are needed. But in many real-world scenarios, collecting such large datasets is impractical or impossible. Few-shot learning tackles this by training models that can generalize from just a few labeled examples per class.
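
Few-shot setups are commonly described as "N-way K-shot" episodes: N classes, K labeled support examples per class, plus some held-out query examples to classify. Here is a minimal sketch of sampling one such episode. The function name `sample_episode`, its parameters, and the toy dataset are illustrative assumptions, not something taken from the paper.

```python
import random

def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Return (support, query) lists of (index, class) pairs for one episode.

    Hypothetical helper for illustration; not the paper's data pipeline.
    """
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    # Only classes with enough examples can take part in the episode.
    eligible = sorted(c for c, idxs in by_class.items()
                      if len(idxs) >= k_shot + n_query)
    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for c in classes:
        picked = rng.sample(by_class[c], k_shot + n_query)
        support += [(i, c) for i in picked[:k_shot]]   # K labeled examples
        query += [(i, c) for i in picked[k_shot:]]     # examples to classify
    return support, query

# Toy dataset (assumed): 10 classes with 20 examples each.
labels = [i % 10 for i in range(200)]
support, query = sample_episode(labels, n_way=5, k_shot=1, n_query=3,
                                rng=random.Random(0))
print(len(support), len(query))  # prints "5 15"
```

With `k_shot=1`, the model gets a single labeled example per class, which is why capturing class variability is so hard.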

However, few-shot learning isn’t easy. The main challenges include:

- Limited Data: Few examples make it hard to capture the full variability of a class.

- Class Ambiguity: Some classes are visually or semantically similar, making it difficult to distinguish them with sparse data.

- Ignoring Class Relationships: Many models treat classes independently, missing out on valuable information about how classes relate to each other.

What Are Conditional Class Dependencies?

Humans naturally understand that some categories are related. For instance, if you know an animal is a dog, you can infer it’s unlikely to be a bird. This kind of reasoning involves conditional dependencies: the probability of one class depends on what is known about the others.

In AI, conditional class dependencies refer to the relationships among classes that influence classification decisions. For example, knowing that a sample is unlikely to belong to a certain class can help narrow down the correct label.
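
To make the "narrowing down" idea concrete, here is a small worked example of plain probability conditioning: once a class is ruled out, the remaining probabilities are rescaled so they sum to one. The class names and numbers are made up for illustration, and `condition_on_exclusion` is a hypothetical helper, not part of the paper's method.

```python
def condition_on_exclusion(probs, excluded):
    """Renormalize class probabilities after ruling out the classes in `excluded`."""
    kept = {c: p for c, p in probs.items() if c not in excluded}
    total = sum(kept.values())
    if total == 0:
        raise ValueError("all probability mass was excluded")
    # P(c | not excluded) = P(c) / P(not excluded)
    return {c: p / total for c, p in kept.items()}

# Assumed toy prediction from a classifier that is unsure between three classes.
probs = {"wolf": 0.40, "husky": 0.35, "fox": 0.25}
posterior = condition_on_exclusion(probs, excluded={"fox"})
print(posterior)  # wolf is now about 0.533, husky about 0.467
```

Ruling out "fox" does not decide between "wolf" and "husky", but it concentrates the probability on the two remaining candidates.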

The New Approach: Learning with Conditional Class Dependencies

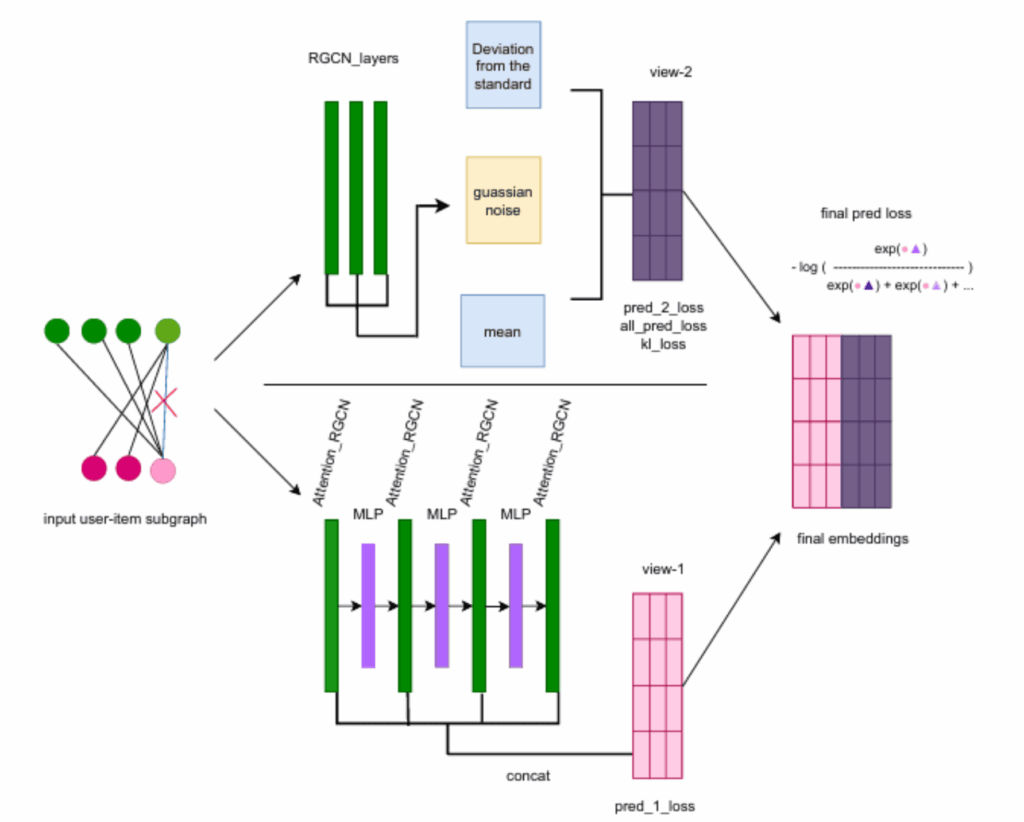

The paper proposes a novel framework that explicitly models these conditional dependencies to improve few-shot classification. Here’s how it works:

1. Modeling Class Dependencies

Instead of treating each class independently, the model learns how classes relate to each other conditionally. This means it understands that the presence of one class affects the likelihood of others.

2. Conditional Class Dependency Graph

The researchers build a graph where nodes represent classes and edges capture dependencies between them. This graph is learned during training, allowing the model to dynamically adjust its understanding of class relationships based on the data.
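
One simple way to picture such a graph is as a matrix of edge weights between class representations. The sketch below is a stand-in, not the paper's construction: it assumes each class is summarized by a vector (a "prototype") and uses a fixed cosine similarity with a softmax over each row, whereas the paper's graph is learned during training. The names `class_graph` and `temperature` are hypothetical.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def class_graph(prototypes, temperature=0.5):
    """Row-stochastic adjacency: entry [i][j] is how strongly class i attends to class j.

    Assumption: fixed cosine similarity replaces the learned edge function.
    """
    n = len(prototypes)
    adj = []
    for i in range(n):
        scores = [cosine(prototypes[i], prototypes[j]) / temperature
                  for j in range(n)]
        top = max(scores)                        # subtract max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        adj.append([e / total for e in exps])    # softmax over the row
    return adj

# Toy prototypes (assumed): classes 0 and 1 point in similar directions.
prototypes = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
adj = class_graph(prototypes)
for row in adj:
    print([round(w, 3) for w in row])
```

In this toy example, classes 0 and 1 receive a larger edge weight with each other than either does with class 2, which is the kind of structure the graph is meant to capture.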

3. Graph Neural Networks (GNNs) for Propagation

To leverage the class dependency graph, the model uses Graph Neural Networks. GNNs propagate information across the graph, enabling the model to refine predictions by considering related classes.
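
Propagation in a GNN can be sketched as a single generic message-passing step: each node averages its neighbors' features according to the edge weights, applies a weight matrix, then a nonlinearity. This is a textbook-style layer under assumed inputs, not the specific architecture from the paper; in practice `weight` would be learned, and here it is set to the identity to keep the arithmetic easy to follow.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def gnn_layer(adj, features, weight):
    """One propagation step: aggregate neighbor features, transform, apply ReLU."""
    aggregated = matmul(adj, features)        # mix features along graph edges
    transformed = matmul(aggregated, weight)  # linear transform (learned in practice)
    return [[max(0.0, v) for v in row] for row in transformed]

# Assumed toy graph: classes 0 and 1 are linked, class 2 only sees itself.
adj = [[0.5, 0.5, 0.0],
       [0.5, 0.5, 0.0],
       [0.0, 0.0, 1.0]]
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
weight = [[1.0, 0.0], [0.0, 1.0]]  # identity, for readability only

updated = gnn_layer(adj, features, weight)
print(updated)  # [[0.5, 0.5], [0.5, 0.5], [2.0, 2.0]]
```

After one step, the two connected classes share information and end up with the same features, while the isolated class is unchanged.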

4. Integration with Few-Shot Learning

This conditional dependency modeling is integrated into a few-shot learning framework. When the model sees a few examples of new classes, it uses the learned dependency graph to make more informed classification decisions.
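
A rough end-to-end picture of how these pieces could fit together is sketched below. It swaps in a nearest-prototype classifier in the style of Prototypical Networks, since the text here does not spell out the paper's exact classifier: class prototypes are averaged from the support examples, blended with their graph neighbors, and a query is assigned to the closest refined prototype. The embeddings, the adjacency matrix, and the `mix` parameter are all assumptions made for illustration.

```python
def mean(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def prototypes_from_support(support):
    """support: dict mapping class name -> list of embedding vectors."""
    return {c: mean(vs) for c, vs in support.items()}

def refine(protos, adj, mix=0.5):
    """Blend each prototype with a dependency-weighted average of all prototypes.

    `adj` rows follow the key order of `protos`; `mix` is a hypothetical knob.
    """
    names = list(protos)
    dim = len(protos[names[0]])
    refined = {}
    for i, c in enumerate(names):
        neighbor = [sum(adj[i][j] * protos[names[j]][d] for j in range(len(names)))
                    for d in range(dim)]
        refined[c] = [(1 - mix) * own + mix * nb
                      for own, nb in zip(protos[c], neighbor)]
    return refined

def classify(query, protos):
    """Return the class whose prototype is closest in squared Euclidean distance."""
    def sqdist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))
    return min(protos, key=lambda c: sqdist(query, protos[c]))

# Assumed 2-way 2-shot episode with made-up 2-D embeddings.
support = {"cat": [[1.0, 0.0], [0.8, 0.2]],
           "dog": [[0.0, 1.0], [0.2, 0.8]]}
adj = [[0.9, 0.1],   # stand-in for a learned dependency graph
       [0.1, 0.9]]

protos = refine(prototypes_from_support(support), adj)
print(classify([0.7, 0.3], protos))  # prints "cat"
```

The design choice worth noting is that the graph only adjusts the class representations; the final decision rule stays a simple distance comparison.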

Why Does This Matter?

By incorporating conditional class dependencies, the model gains several advantages:

- Improved Accuracy: Considering class relationships helps disambiguate confusing classes, boosting classification performance.

- Better Generalization: The model can generalize knowledge about class relationships to new, unseen classes.

- More Human-Like Reasoning: The model mimics how humans use context and relationships to make decisions, especially with limited information.

Real-World Impact: Where Could This Help?

This advancement isn’t just theoretical. It could have practical implications across many domains:

- Medical Diagnosis: Diseases often share symptoms, and understanding dependencies can improve diagnosis with limited patient data.

- Wildlife Monitoring: Rare species sightings are scarce; modeling class dependencies can help identify species more accurately.

- Security and Surveillance: Quickly recognizing new threats or objects with few examples is critical for safety.

- Personalized Recommendations: Understanding relationships among user preferences can enhance recommendations from sparse data.

Experimental Results: Proof in the Numbers

The researchers tested their approach on standard few-shot classification benchmarks and found:

- Consistent improvements over state-of-the-art methods.

- Better performance, especially in challenging scenarios with highly similar classes.

- Robustness to noise and variability in the few-shot samples.

These results highlight the power of explicitly modeling class dependencies in few-shot learning.

How Does This Fit Into the Bigger AI Picture?

AI is moving towards models that require less data and can learn more like humans. This research is part of a broader trend emphasizing:

- Self-Supervised and Semi-Supervised Learning: Learning from limited or unlabeled data.

- Graph-Based Learning: Using relational structures to enhance understanding.

- Explainability: Models that reason about class relationships can be easier to interpret, since the graph shows which classes influence each other.

Takeaways: What Should You Remember?

- Few-shot learning is crucial for AI to work well with limited data.

- Traditional models often ignore relationships between classes, limiting their effectiveness.

- Modeling conditional class dependencies via graphs and GNNs helps AI make smarter, context-aware decisions.

- This approach improves accuracy, generalization, and robustness.

- It has wide-ranging applications from healthcare to security.

Looking Ahead: The Future of Few-Shot Learning

As AI continues to evolve, integrating richer contextual knowledge like class dependencies will be key to building systems that learn efficiently and reliably. Future research may explore:

- Extending dependency modeling to multi-label and hierarchical classification.

- Combining with other learning paradigms like meta-learning.

- Applying to real-time and dynamic learning environments.

Final Thoughts

The ability of AI to learn quickly and accurately from limited examples is a game-changer. By teaching machines to understand how classes relate conditionally, we bring them one step closer to human-like learning. This not only advances AI research but also opens doors to impactful applications across industries.

Stay tuned as the AI community continues to push the boundaries of few-shot learning and builds smarter, more adaptable machines!

Paper: https://arxiv.org/pdf/2506.09205
