Hi there! I’m Sömnez Hüseyin and I’m a mathematician, a tech enthusiast, and a DIY AI researcher currently based in beautiful Istanbul, Turkey.

My journey into the world of bits and neurons started at Istanbul Technical University (ITU), where I earned my degree in Mathematics and Computer Science. That academic foundation gave me a deep appreciation for the logic behind the code, but my real passion lies in the “how-to”—taking cutting-edge artificial intelligence research papers and breathing life into them on my own hardware.

Why I’m Obsessed with AI & AGI

I firmly believe that Artificial Intelligence is not just a tool, but the defining frontier of our generation. Beyond the hype, I’m deeply fascinated by the quest for Artificial General Intelligence (AGI). Is it truly achievable? How close are we? I use this blog to document my experiments, share my theories, and spark conversations with fellow enthusiasts about where this road is taking humanity.

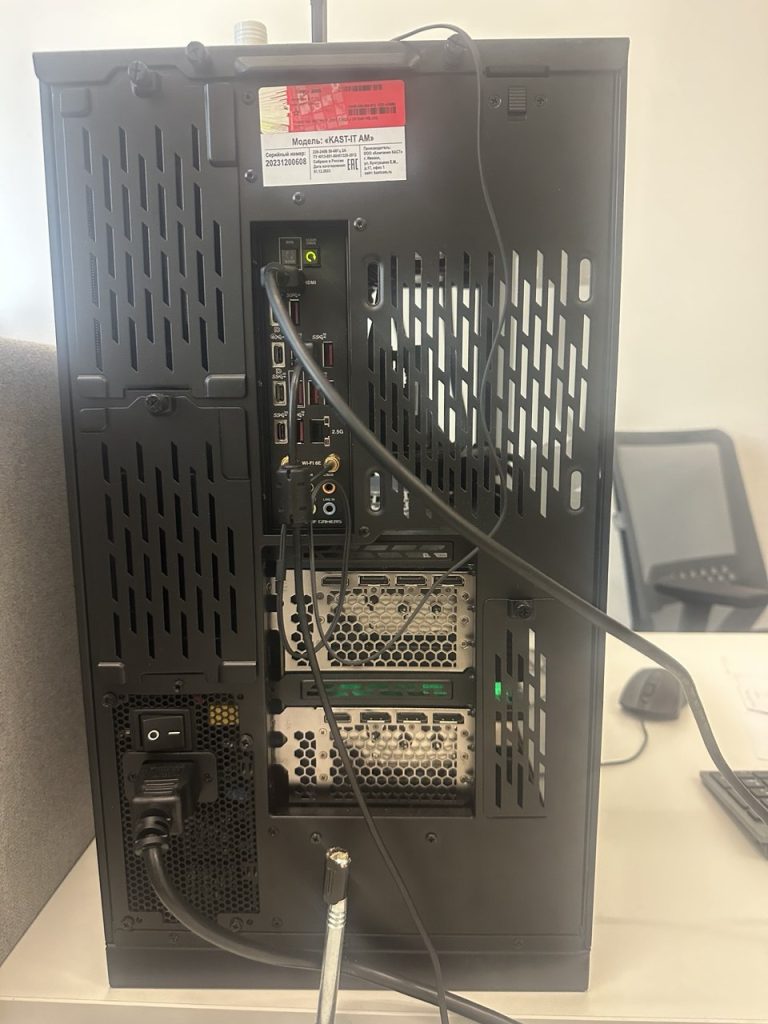

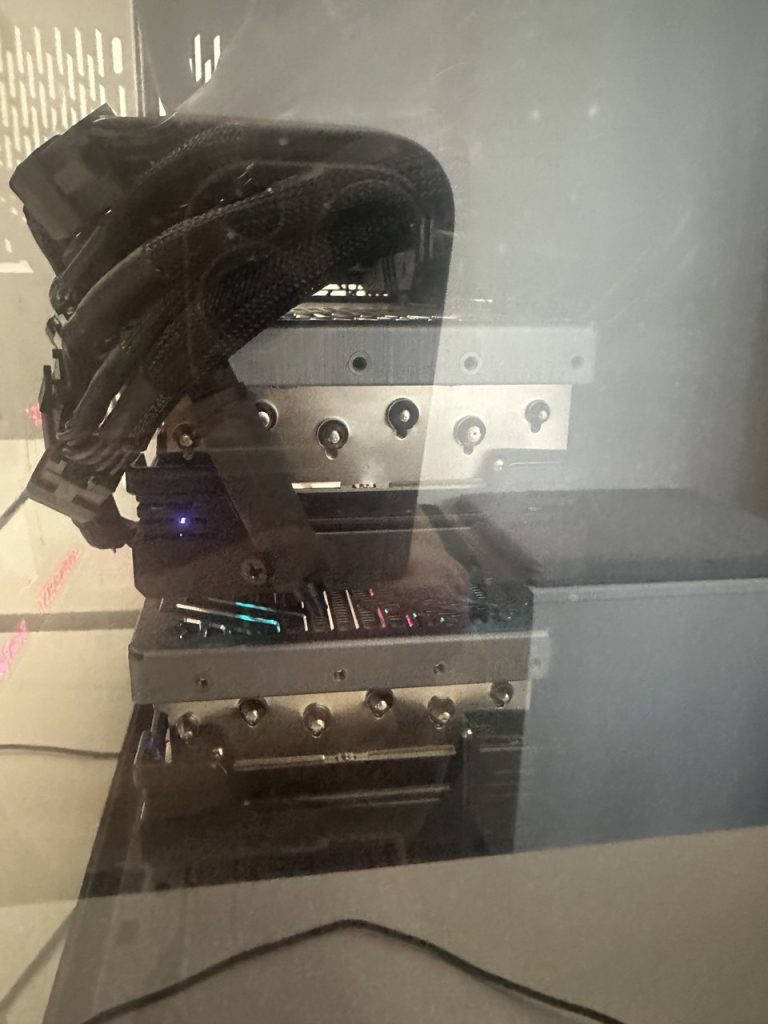

Inside the Rig: My DIY Artificial Intelligence Workstation

To reproduce modern papers (especially LLMs and diffusion models), you can’t rely on basic hardware. I’ve built a custom workstation designed to handle heavy compute loads without the need for expensive cloud subscriptions.

The Specs:

- Compute: 10+ Core High-Performance CPU (Optimized for multi-threaded data preprocessing).

- Memory: 64GB RAM (Scalable to 128GB for processing massive datasets and high-parameter model caching).

- Graphics (The Heart): Dual Nvidia RTX 4080 GPUs (16GB VRAM each, 32GB combined, for complex tensor operations).

- Storage: 2TB M.2 NVMe SSD for ultra-fast data throughput + 6TB HDD for long-term model weights and cold storage.

- Power & Stability: 1000W+ Gold-rated PSU, with the whole system running a native Ubuntu Linux environment.

Why this setup?

In the rapidly evolving landscape of artificial intelligence, VRAM is king. While a single high-end card is sufficient for basic tasks, a dual-GPU configuration turns a standard workstation into a localized AI laboratory. Running dual RTX 4080s enables parallel computing and model sharding: a large architecture that would not fit in one card's 16GB, and would otherwise crush a consumer PC, can be split across both GPUs. That extra memory headroom makes it possible to fine-tune specialized models (LLMs, Stable Diffusion) and run inference on high-parameter networks locally. The two cards communicate over PCIe (the RTX 40 series has no NVLink), so sharding adds some inter-GPU transfer overhead compared with a single card that has the same total memory.
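To make "VRAM is king" concrete, here is a minimal sketch of the arithmetic behind sharding. It estimates how much memory a model's weights need and greedily assigns consecutive layers to the two cards. This is only one simple strategy (naive layer-wise model parallelism), not the method of any particular library. The model size, layer count and the 14 GiB per-card budget are hypothetical. The estimate also counts weights only: activations, KV cache and optimizer state are ignored, so real usage is higher.

```python
# Hypothetical sketch: estimate weight memory and greedily shard consecutive
# layers across two GPUs. Weights only: activations, KV cache, and optimizer
# state are ignored, so real usage is higher.

GIB = 1024 ** 3
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str = "fp16") -> float:
    """Approximate memory needed just to store the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / GIB


def shard_layers(layer_sizes_gib: list[float], gpu_budget_gib: float,
                 num_gpus: int = 2) -> list[list[int]]:
    """Assign consecutive layers to GPUs, filling each card up to its budget.

    Returns one list of layer indices per GPU used. Raises ValueError if the
    layers cannot fit within num_gpus cards of gpu_budget_gib each.
    """
    shards: list[list[int]] = [[]]
    used = 0.0
    for idx, size in enumerate(layer_sizes_gib):
        if size > gpu_budget_gib:
            raise ValueError(f"layer {idx} alone exceeds one GPU's budget")
        if used + size > gpu_budget_gib:
            # Current card is full: start filling the next one.
            if len(shards) == num_gpus:
                raise ValueError("model does not fit on the available GPUs")
            shards.append([])
            used = 0.0
        shards[-1].append(idx)
        used += size
    return shards


if __name__ == "__main__":
    # Hypothetical 13B-parameter model in fp16, split into 40 equal layers.
    total = weight_memory_gib(13_000_000_000, "fp16")
    layers = [total / 40] * 40
    # Leave ~2 GiB of each 16GB card free for activations (assumed budget).
    shards = shard_layers(layers, gpu_budget_gib=14.0)
    print(f"weights: {total:.1f} GiB")
    for gpu, idxs in enumerate(shards):
        print(f"cuda:{gpu}: layers {idxs[0]}-{idxs[-1]}")
```

For this hypothetical 13B model, the weights alone come to about 24.2 GiB, which is more than one card can hold. The greedy pass puts layers 0 to 22 on the first GPU and layers 23 to 39 on the second. In PyTorch, each group of layers would then be moved to its device with `module.to("cuda:0")` or `module.to("cuda:1")`, and activations would cross PCIe at the boundary.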

The Synergetic Core: CPU & RAM

The 10+ core CPU acts as the essential “conductor.” It handles heavy data augmentation, ETL (Extract, Transform, Load) processes and the I/O flow, so the GPUs are never “starved” for data. Coupled with 64GB of RAM (scalable to 128GB), the system can hold large datasets in memory, which reduces data-loading bottlenecks during training.
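The "conductor" role boils down to a producer/consumer pattern. CPU workers prepare upcoming samples in the background while the training loop consumes the ones that are ready. Below is a minimal sketch of that idea using only Python's standard library. `preprocess` is a hypothetical stand-in for real augmentation work, and the bounded queue applies backpressure so workers don't run arbitrarily far ahead.

```python
import queue
import threading
from typing import Iterator, Sequence


def preprocess(sample: int) -> int:
    """Stand-in for CPU-side work such as decoding or augmentation (hypothetical)."""
    return sample * 2


def _producer(samples: Sequence[int], out_q: queue.Queue, stop: object) -> None:
    for s in samples:
        # put() blocks while the queue is full, so workers never run too far ahead.
        out_q.put(preprocess(s))
    out_q.put(stop)  # signal that this worker is done


def prefetching_loader(samples: Sequence[int], num_workers: int = 4,
                       prefetch: int = 8) -> Iterator[int]:
    """Yield preprocessed samples while worker threads prepare the next ones.

    Output order is not guaranteed.
    """
    q: queue.Queue = queue.Queue(maxsize=prefetch)
    stop = object()
    # Split the work round-robin across the workers.
    chunks = [samples[i::num_workers] for i in range(num_workers)]
    threads = [threading.Thread(target=_producer, args=(c, q, stop), daemon=True)
               for c in chunks]
    for t in threads:
        t.start()
    finished = 0
    while finished < num_workers:
        item = q.get()
        if item is stop:
            finished += 1
        else:
            yield item  # the training loop (the "GPU side") consumes this sample
    for t in threads:
        t.join()


if __name__ == "__main__":
    print(sum(prefetching_loader(list(range(1000)))))  # 999000
```

Threads keep this sketch stdlib-only. For CPU-heavy Python transforms, the GIL would limit them. In practice, PyTorch's `torch.utils.data.DataLoader` uses worker processes for this, controlled by `num_workers` and `prefetch_factor`, with `pin_memory` to speed up host-to-GPU copies.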

Storage & Throughput

Artificial intelligence development involves constant reading and writing of large checkpoint files. The 2TB NVMe SSD lets model weights load from disk into VRAM quickly. Meanwhile, the 6TB HDD serves as the “warehouse” for versioning large models without cluttering the primary high-speed workspace.
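One way to keep the NVMe workspace lean is to leave only the newest checkpoints on the fast drive and move older ones to the HDD. This is a minimal sketch of that policy. The `*.pt` file pattern, the "keep the 3 newest" rule and the directory layout are assumptions for illustration, not a description of an existing tool.

```python
import shutil
from pathlib import Path


def tier_checkpoints(fast_dir: Path, cold_dir: Path, keep: int = 3,
                     pattern: str = "*.pt") -> list[Path]:
    """Move all but the `keep` newest checkpoints from fast_dir to cold_dir.

    "Newest" is judged by file modification time. Returns the new paths
    of the files that were moved.
    """
    cold_dir.mkdir(parents=True, exist_ok=True)
    # Newest first, so everything after the first `keep` entries is archived.
    ckpts = sorted(fast_dir.glob(pattern), key=lambda p: p.stat().st_mtime,
                   reverse=True)
    moved = []
    for ckpt in ckpts[keep:]:
        dest = cold_dir / ckpt.name
        # Across different drives, shutil.move copies the file, then deletes the original.
        shutil.move(str(ckpt), str(dest))
        moved.append(dest)
    return moved
```

A call such as `tier_checkpoints(Path("/mnt/nvme/checkpoints"), Path("/mnt/hdd/archive"))` would then archive everything except the three most recent files. Both mount points are hypothetical. Because the SSD and HDD are separate filesystems, each move is a full copy, so it is best run between training runs rather than during them.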

The Linux Advantage

Running Ubuntu Linux is a strategic choice. It provides “bare-metal” control over the hardware, which matters for optimized CUDA drivers and seamless Docker containerization. Ubuntu offers a low-overhead environment with efficient memory management and first-class support for the major artificial intelligence libraries. That is why it has become the de facto standard for many machine learning developers.

My Verdict

This setup is engineered for the power user who needs to bridge the gap between “hobbyist” experimentation and “enterprise-level” model deployment. It offers the stability, thermal headroom, and raw TFLOPS required to push the boundaries of modern machine learning.

What You’ll Find Here

I don’t just talk about artificial intelligence; I build it. Whether you’re interested in how a specific architecture performs on consumer hardware or you want to debate the philosophical hurdles of AGI, you’re in the right place.

I’m here to show that you don’t need a multi-million dollar data center to contribute to the AI revolution—just some math, a bit of Python, and a very powerful power supply.

You can also read my new article about how I built my AI home lab.

If you have any questions regarding my research or want to discuss upcoming AI conferences, you can reach me via Facebook.