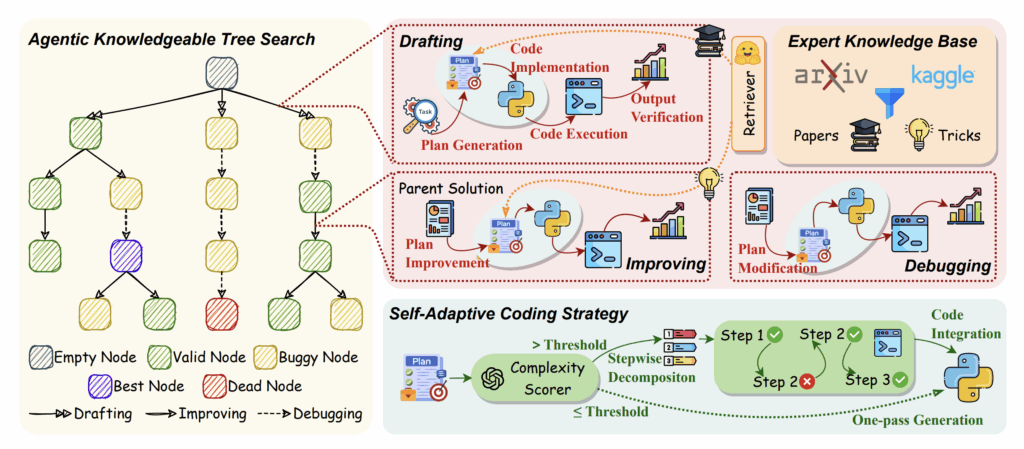

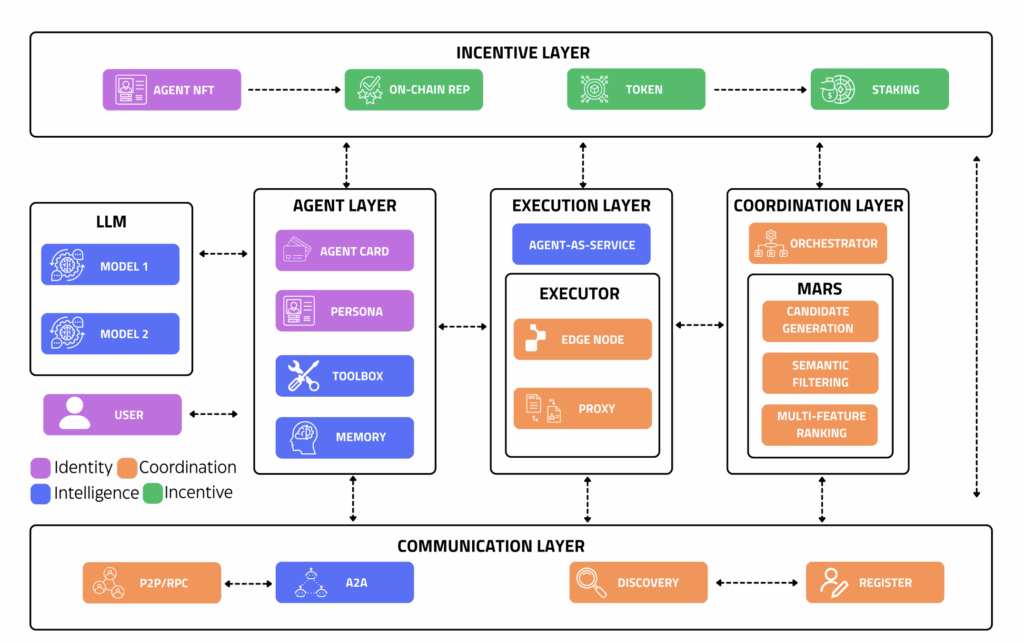

ISEK framework: Diagram of decentralized multi-agent system architecture for ISEK knowledge emergence

Alright, fellow hardware junkies and algorithm enthusiasts. You know my journey: from building my dual-RTX 4080 rig to wrestling with scaling laws and even trying to birth a local data scientist with AutoMind. Each step has been about pushing the boundaries of what one person can do with local compute.

But what if the next frontier isn’t about building a smarter single agent, but about orchestrating billions of emergent minds? That’s the mind-bending concept behind the ISEK framework (Intelligent System of Emergent Knowledge), which I recently explored in a theoretical overview. It’s about a decentralized, self-organizing knowledge fabric.

Now, as an Implementation-First researcher, I think theory is great, but building is better. While I can’t launch a global decentralized network from my home office (yet!), I decided to tackle a micro-scale reproduction: building a “mini-ISEK” coordination layer to observe emergent knowledge.

The Grand Vision of the ISEK framework: What Even IS ISEK, Locally?

The core idea of the ISEK framework is a system where individual agents (or “minds”) contribute tiny fragments of knowledge, and a higher-order intelligence emerges from their collective, self-organizing interactions. Think of it like a decentralized brain, but instead of neurons, you have small AI models constantly communicating and refining a shared understanding.

My “TechnoDIY” goal was to simulate this on my local machine:

- Tiny Minds: Instead of billions, I’d run a few dozen small, specialized Llama-3-8B (or Phi-3) instances.

- Coordination Fabric: A custom Python orchestrator to simulate the communication protocols.

- Emergent Knowledge: A shared vector store where these “minds” collectively build a knowledge graph around a specific, complex topic (e.g., advanced CUDA optimization techniques).

The Hardware and Software Gauntlet

This project pushed my dual-RTX 4080 setup to its absolute limits, not just in terms of VRAM, but in terms of CPU cores for orchestrating all these concurrent processes.

- The Brains (on my rig): Multiple instances of `llama-cpp-python` running Llama-3-8B. Each instance consumes a surprising amount of CPU and some VRAM for its KV cache.

- The Fabric: A custom Python `asyncio` server acting as the “Coordination Hub.”

- The Knowledge Store: A local ChromaDB instance for storing and retrieving vector embeddings of shared “insights.”
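To put a rough number on the "some VRAM for its KV cache" part, here is a back-of-the-envelope sketch. The defaults (32 layers, 8 KV heads, head dimension 128) follow the published Llama-3-8B configuration; the 16-bit cache and the context lengths are assumptions for illustration, not settings measured on this rig.

```python
def kv_cache_bytes(n_ctx: int, n_layers: int = 32, n_kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Estimate the KV-cache size of one model instance, in bytes.

    Defaults follow the published Llama-3-8B config. bytes_per_value=2
    assumes a 16-bit cache, which is an assumption, not a measured setting.
    """
    # Factor 2: one key tensor and one value tensor per layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * n_ctx


if __name__ == "__main__":
    for n_ctx in (2048, 8192):
        gib = kv_cache_bytes(n_ctx) / 2**30
        print(f"n_ctx={n_ctx}: ~{gib:.2f} GiB of KV cache per instance")
```

This only covers the cache. Model weights and scratch buffers come on top and depend on the quantization you load, so treat the result as a lower bound per instance.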

Building the Decentralized Fabric (Code Walkthrough)

The true challenge wasn’t just running multiple LLMs, but making them communicate intelligently and self-organize towards a common goal. Here’s a simplified Python snippet for the CoordinationHub – the heart of my mini-ISEK:

```python
import asyncio
import uuid
from typing import Dict, List, Tuple


class CoordinationHub:
    """
    Simulates the decentralized coordination fabric of ISEK.
    Agents register, submit knowledge fragments, and query for consensus.
    """

    def __init__(self, knowledge_store):
        self.agents: Dict[str, asyncio.Queue] = {}
        self.knowledge_store = knowledge_store
        self.consensus_threshold = 3

    async def register_agent(self, agent_id: str):
        self.agents[agent_id] = asyncio.Queue()
        print(f"Agent {agent_id} registered.")
        return agent_id

    async def submit_knowledge(self, agent_id: str, fragment: str):
        print(f"Agent {agent_id} submitted: '{fragment[:50]}...'")
        self.knowledge_store.add_fragment(agent_id, fragment)
        # Trigger peer review/consensus for this fragment
        await self._trigger_consensus_check(fragment)

    async def _trigger_consensus_check(self, new_fragment: str):
        await asyncio.sleep(0.1)  # Simulate network delay
        # Check if similar fragments exist to reach 'Emergence'
        similar_count = self.knowledge_store.count_similar(new_fragment, threshold=0.8)
        if similar_count >= self.consensus_threshold:
            print(f"!!! Emergent Knowledge: '{new_fragment[:50]}...' reached consensus!")
```
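The hub calls `add_fragment` and `count_similar` on the knowledge store, but the snippet doesn't show that object. Here is a minimal in-memory stand-in so the hub can be exercised without ChromaDB or an embedding model. The class name `InMemoryKnowledgeStore` and the bag-of-words cosine similarity are assumptions made for illustration; the real store in this project is ChromaDB with vector embeddings.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


class InMemoryKnowledgeStore:
    """Hypothetical stand-in for the knowledge store the hub expects.

    Uses word-count vectors and cosine similarity instead of real
    embeddings, so it runs with the standard library only.
    """

    def __init__(self) -> None:
        # Each entry: (agent_id, original text, word-count vector)
        self.fragments: List[Tuple[str, str, Counter]] = []

    @staticmethod
    def _vectorize(text: str) -> Counter:
        # Toy "embedding": lowercase alphanumeric word counts.
        return Counter(re.findall(r"[a-z0-9]+", text.lower()))

    @staticmethod
    def _cosine(a: Counter, b: Counter) -> float:
        dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
        norm_a = math.sqrt(sum(v * v for v in a.values()))
        norm_b = math.sqrt(sum(v * v for v in b.values()))
        if norm_a == 0 or norm_b == 0:
            return 0.0
        return dot / (norm_a * norm_b)

    def add_fragment(self, agent_id: str, fragment: str) -> None:
        self.fragments.append((agent_id, fragment, self._vectorize(fragment)))

    def count_similar(self, fragment: str, threshold: float = 0.8) -> int:
        # Counts every stored fragment at or above the threshold,
        # including the fragment itself if it was already added.
        query = self._vectorize(fragment)
        return sum(1 for _, _, vec in self.fragments
                   if self._cosine(query, vec) >= threshold)


if __name__ == "__main__":
    store = InMemoryKnowledgeStore()
    store.add_fragment("agent-1", "Coalesce global memory accesses in CUDA kernels")
    store.add_fragment("agent-2", "coalesce global memory accesses in cuda kernels")
    store.add_fragment("agent-3", "Use shared memory tiling for matrix multiply")
    print(store.count_similar("Coalesce global memory accesses in CUDA kernels"))
```

An instance can be passed straight to `CoordinationHub(...)`. Two things to keep in mind: the hub adds a fragment before counting, so a fragment always counts itself toward the threshold of 3, and a single agent repeating itself would also pass. Counting distinct agent IDs instead of fragments would be a stricter variant.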

Centralized Power vs. Emergent Intelligence: The Trade-offs

To understand why the ISEK framework is a game-changer for the DIY community, I compared the monolithic approach (one big model) with the emergent approach (ISEK) based on my own local metrics:

| Feature | Monolithic LLM (e.g., GPT-4) | Emergent System (ISEK-like) |
| --- | --- | --- |
| Compute Requirement | Massive single-node (H100s) | Distributed heterogeneous nodes |
| Fault Tolerance | Single point of failure | Highly resilient (redundancy) |
| Knowledge Update | Expensive retraining/fine-tuning | Real-time via “Knowledge Fabric” |
| Specialization | Generalist, prone to hallucination | Expert-driven sub-agents |
| Scalability | Vertical (More VRAM needed) | Horizontal (More agents = more power) |
| DIY Feasibility | Very Low | Very High |

Comparison table between centralized monolithic LLMs and emergent distributed systems

The “Bare-Metal” Realities

Running this locally revealed three major bottlenecks:

- CPU Core Starvation: My 10+ core CPU struggled. I had to manually pin processes to specific cores using `taskset` to prevent thrashing.

- VRAM Fragmentation: After running 3 instances of Llama-3-8B, my 32 GB of combined VRAM (2 × 16 GB) was dangerously close to full. For larger scales, you need dedicated inference accelerators.

- Consensus Latency: Asynchronous communication is fast, but waiting for “consensus” between digital minds takes time: about 12 seconds per “insight” on my rig.
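The core pinning can also be done from inside Python instead of wrapping each process in `taskset`. The sketch below is one way to do it under some assumptions: the helper names `plan_core_assignment` and `pin_current_process` are hypothetical, the round-robin split ignores physical core and hyperthread layout, and `os.sched_setaffinity` is only available on Linux.

```python
import os
from typing import Dict, List


def plan_core_assignment(n_workers: int, cores: List[int]) -> Dict[int, List[int]]:
    """Split `cores` round-robin so each worker gets a non-overlapping set."""
    if n_workers <= 0 or n_workers > len(cores):
        raise ValueError("need at least one core per worker")
    return {w: cores[w::n_workers] for w in range(n_workers)}


def pin_current_process(cores: List[int]) -> bool:
    """Pin the calling process to `cores`. Returns False where unsupported."""
    if not hasattr(os, "sched_setaffinity"):  # Linux-only API
        return False
    os.sched_setaffinity(0, set(cores))  # pid 0 means "this process"
    return True


if __name__ == "__main__":
    if hasattr(os, "sched_getaffinity"):
        available = sorted(os.sched_getaffinity(0))
    else:
        available = list(range(os.cpu_count() or 1))
    n_workers = min(3, len(available))  # 3 mirrors the three model instances
    print(plan_core_assignment(n_workers, available))
```

Each worker process would call `pin_current_process(plan[worker_index])` once at startup, before loading its model.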

TechnoDIY Takeaways

If you want to experiment with emergent systems locally:

- Start with Nano-Agents: Use Phi-3 or specialized tiny models. You need quantity to see emergence.

- Focus on the Fabric: The communication protocol is more important than the individual LLM.

- Trust the Redundancy: Multiple agents independently solving the same sub-problem leads to far more robust code than one large model guessing.
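The redundancy idea can be sketched as a simple vote over independent answers. This is a simplification under stated assumptions: the function name `majority_answer` is hypothetical, answers are compared by exact match after whitespace and case normalization (the hub in this post compares by similarity instead), and `min_votes=3` just mirrors the hub's `consensus_threshold`.

```python
from collections import Counter
from typing import List, Optional


def majority_answer(answers: List[str], min_votes: int = 3) -> Optional[str]:
    """Return the most common normalized answer if it has at least min_votes."""
    if not answers:
        return None
    # Normalize case and collapse whitespace so trivial differences don't split votes.
    normalized = [" ".join(a.lower().split()) for a in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner if votes >= min_votes else None


if __name__ == "__main__":
    proposals = [
        "Use pinned host memory",
        "use  pinned host memory",
        "USE PINNED HOST MEMORY",
        "Use more streams",
    ]
    print(majority_answer(proposals))
```

If no answer reaches `min_votes`, the function returns `None`, which is the signal to ask more agents or lower the bar.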

Final Thoughts

My journey into the ISEK framework at a micro-scale proved that the future of AI isn’t just about building one super-powerful mind. It’s about connecting billions of smaller ones. My dual-4080 rig is no longer just a workstation; it’s a node in what I hope will eventually become a global fabric of shared intelligence.

The room is hot, the fans are screaming, but the emergent insights are real. That’s the beauty of building the future in your own office.

Sömnez Hüseyin Implementation-First Research Lab

See also:

While the ISEK framework provides the structural foundation for your data, its true power is realized when paired with autonomous systems like AutoMind, which can navigate these knowledge layers to automate complex analytical workflows.

One of the main motivations behind the ISEK framework is to mitigate the illusion of thinking in reasoning models by providing a verifiable knowledge structure, ensuring the AI relies on grounded data rather than stochastic pattern matching.

The ISEK framework is essentially an evolution of the Retrieval-Augmented Generation (RAG) approach, focusing on enhancing the ‘quality’ of retrieved knowledge before it ever reaches the prompt.